Tech giants sign open letter against Terminator-style AI weapons

Forget the R2-D2 jokes, Terminator references and sci-fi hype - autonomous weapons could very well be the "third revolution in warfare, after gunpowder and nuclear arms", and it's a threat some of the biggest names in technology and science are looking to stop.

Over 1,000 artificial intelligence experts and researchers have signed an open letter calling for a ban on "offensive autonomous weapons", looking to take the legs out of any possible military artificial intelligence arms race before it gets started.

Signatories to the letter, presented at the International Joint Conference on Artificial Intelligence in Buenos Aires, Argentina, include Stephen Hawking, Tesla and SpaceX's Elon Musk and Apple co-founder Steve Wozniak, all united by the fear that this third revolution in warfare could lead to a greater loss of human life.

Published by the Future of Life Institute, the letter warns that this new wave of AI weaponry could be feasible within years:

"Many arguments have been made for and against autonomous weapons, for example that replacing human soldiers by machines is good by reducing casualties for the owner but bad by thereby lowering the threshold for going to battle. The key question for humanity today is whether to start a global AI arms race or to prevent it from starting."

"If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow. Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce. It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc."

The letter calls for an ethical approach to warfare, stating that its signatories believe "that a military AI arms race would not be beneficial for humanity". It does not call for an outright ban on all forms of AI on the battlefield, suggesting that certain uses can make battlefields safer for humans, particularly civilians, "without creating new tools for killing people."

It goes on to cite comparable efforts by chemists and physicists in the past, who worked to ban chemical and biological weapons and space-based nuclear weapons.

The bid to forestall an AI arms race may already be coming too late. South Korea has installed a series of automated gun turrets along its border with North Korea. They still require human input before a kill order is given, but they have already set a precedent for automated systems that identify potential threats from up to 3km away and request a kill order.

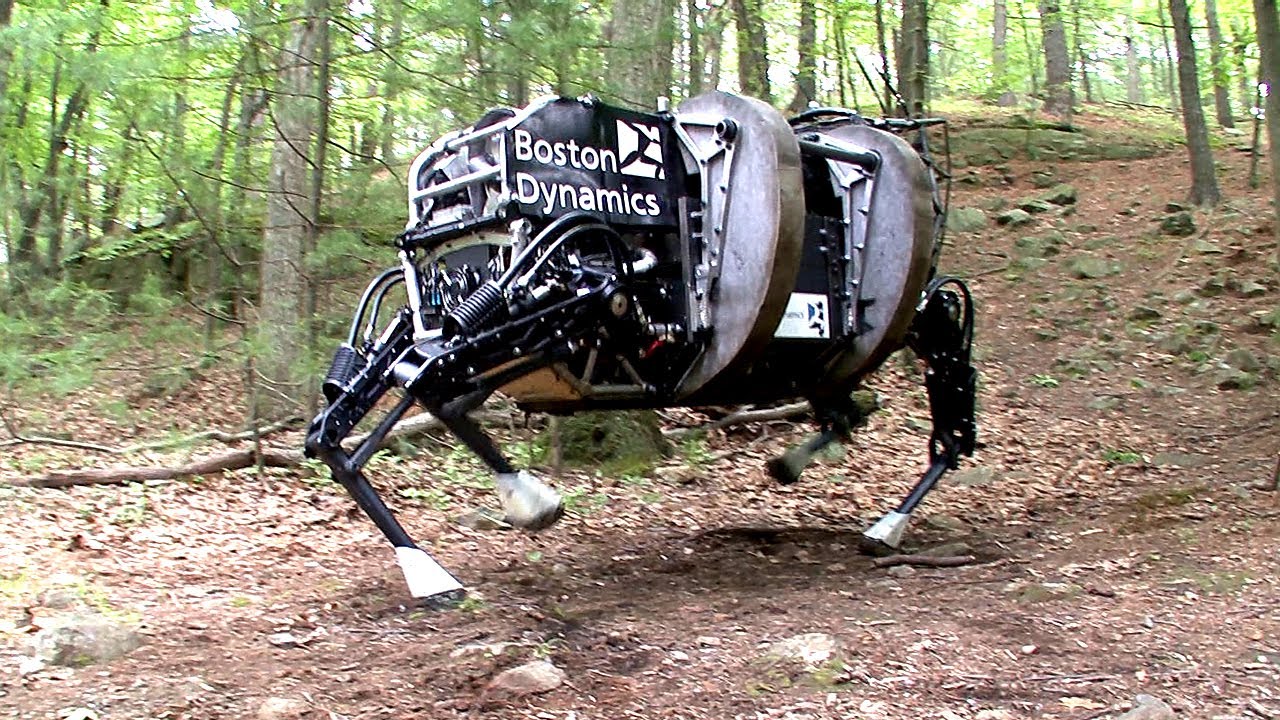

Companies such as Boston Dynamics have already begun trials with the US military of semi-autonomous robots designed to support personnel, including the Legged Squad Support System (pictured), which could feasibly be weaponised to carry a payload or a static gun onto the battlefield.

The letter's signatories hope that a legal ban on the development of "offensive autonomous weapons beyond meaningful human control" (a phrase we imagine will come in for a great deal of legal scrutiny) will stop the once-fictional horrors of Skynet from becoming the weapons of tomorrow.

To add your own signature to the letter, head to the Future of Life Institute website.

Until a ban enters wider discussion, we'll be unplugging every electronic device we see acting shifty, just to be safe.

(Images: Rex)

[Via: The Guardian]

As a former Shortlist Staff Writer, Danielle spends most of her time compiling lists of the best ways to avoid using the Central Line at rush hour.